· admin2282 · Blog · 5 min read

Understanding AI in 2025: From Large Language Models to No-Code Automation

The AI Landscape Before the LLM Revolution

Before 2022, artificial intelligence was already part of our daily lives, but more subtly. It manifested through recommendation algorithms on apps like Instagram and Amazon, or in translation tools like DeepL. These systems worked very well but were specialized: each AI was designed for a single specific task, like image recognition.

These tools lacked flexibility and often had little conversational capability. The AI landscape was a collection of high-performing but siloed tools, each operating in its own bubble.

The Turning Point: The Advent of General-Purpose Large Language Models (LLMs)

The arrival of Large Language Models, or LLMs, notably ChatGPT in November 2022, turned everything upside down. An LLM is a bit like a digital version of the National Library of France that has not only read all of its books but can also synthesize them. As a result, AI is no longer perceived as a single-task tool, but as a veritable Swiss Army knife.

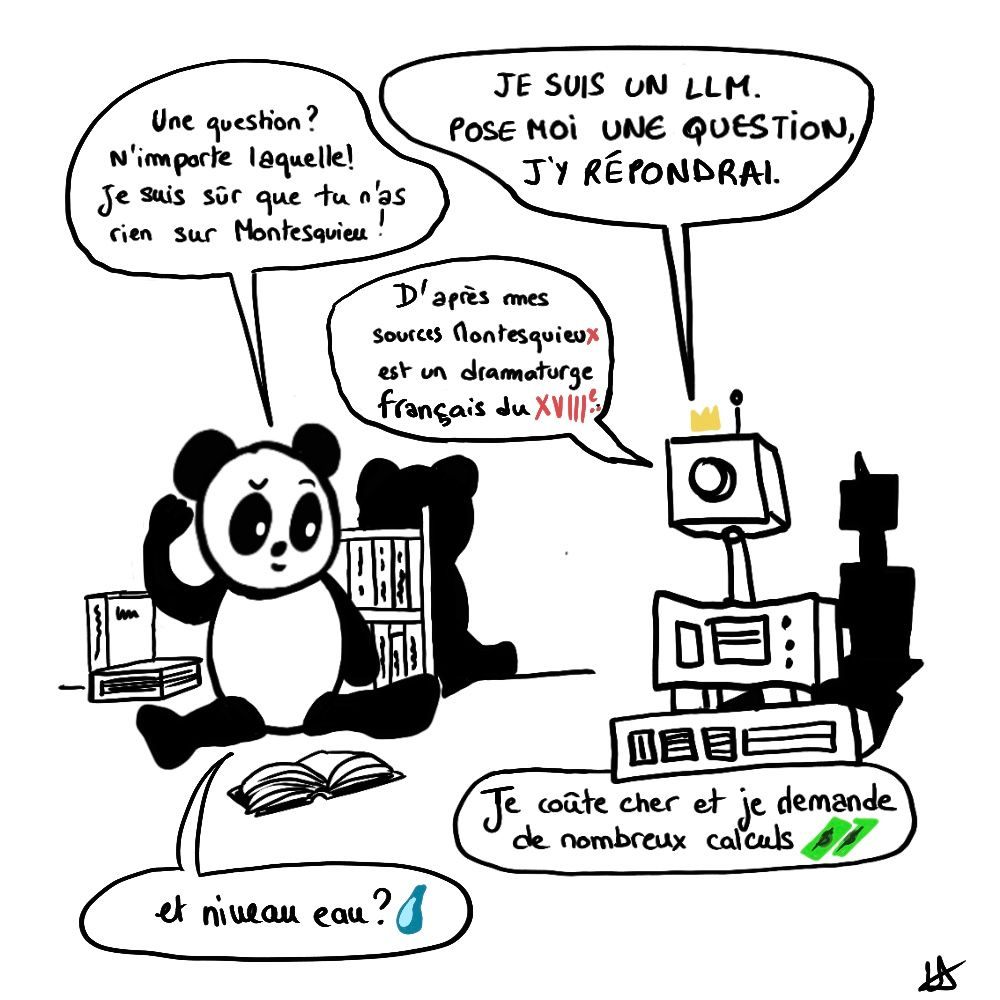

AI is no longer an abstract concept reserved for experts, but a conversational tool accessible to everyone, capable of writing emails, generating code, or summarizing complex documents. However, these generalist models are not perfect:

- They suffer from what are called "hallucinations": they produce information that seems plausible but is actually incorrect.

- Running them requires considerable computing power, which means a high cost and an obstacle to large-scale adoption.

Specialization and Efficiency: Fine-Tuning, RAG, and SLMs

Faced with the limitations of these generalist models, the trend quickly shifted towards specialization. This is where fine-tuning comes in. The concept is simple: we take a generalist model and train it further on a very targeted dataset to make it an expert in that domain. For example, one could imagine a company like Doctolib providing a large number of health-related questions and answers to produce a model specialized in helping doctors with their daily tasks. To learn more about fine-tuning, there is this great research paper: https://arxiv.org/html/2408.13296v1 (a good part of it is very accessible).
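
To make the "very targeted dataset" idea more concrete, here is a minimal sketch of how question-and-answer pairs might be packaged before fine-tuning. Everything in it is an assumption for illustration: the `prompt` and `completion` field names, the `to_jsonl` helper, and the placeholder pairs are hypothetical, and each fine-tuning tool expects its own format.

```python
import json

# Placeholder question/answer pairs (hypothetical, not real medical content).
qa_pairs = [
    ("How do I reschedule a patient appointment?", "<answer written by a domain expert>"),
    ("Which documents are needed for a first consultation?", "<answer written by a domain expert>"),
]

def to_jsonl(pairs):
    """Serialize (question, answer) pairs as JSON Lines, one training example per line."""
    lines = []
    for question, answer in pairs:
        # The field names are an assumption; adapt them to the tool you use.
        record = {"prompt": question, "completion": answer}
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines)

dataset = to_jsonl(qa_pairs)
```

In practice such a file would contain thousands of examples, and its quality matters at least as much as its size.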

However, fine-tuning has a major drawback: it is costly and technically complex. This is why an alternative approach has become popular: RAG (Retrieval-Augmented Generation). Instead of re-training a generalist model, we connect it to an external knowledge base that the model can query in real time. This allows for precise and up-to-date answers without modifying the model itself. A chatbot for your website is a perfect use case for this technique. But be careful: the larger or more poorly structured the knowledge base, the harder it is for the generalist model to use the information correctly. This is a very common problem. AWS explains how RAG works here: https://aws.amazon.com/fr/what-is/retrieval-augmented-generation/.
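
The retrieval step of RAG can be sketched in a few lines. This is a simplified illustration, not a production setup: the knowledge base is a hypothetical list of three sentences, a bag-of-words cosine similarity stands in for the embedding search a real system would typically use, and the prompt is only built, not sent to any model.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge base for a website chatbot (illustrative only).
KNOWLEDGE_BASE = [
    "Our support team is available Monday to Friday, 9am to 6pm.",
    "Orders can be returned within 30 days of delivery.",
    "Shipping to France takes 2 to 4 business days.",
]

def tokenize(text):
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors (Counter objects)."""
    dot = sum(a[token] * b[token] for token in set(a) & set(b))
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve(query, documents, top_k=1):
    """Return the top_k documents most similar to the query, skipping zero scores."""
    query_vec = Counter(tokenize(query))
    scored = [(cosine_similarity(query_vec, Counter(tokenize(doc))), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]

def build_prompt(query, documents):
    """Put the retrieved passages in front of the question, ready for a model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

prompt = build_prompt("How long does shipping to France take?", KNOWLEDGE_BASE)
```

The model itself never changes: only the context placed in the prompt does, which is why the quality and structure of the knowledge base matter so much.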

Finally, it is also possible to design lighter models built for efficiency: Small Language Models (SLMs). They do not possess the breadth of knowledge of an LLM, but they can excel at specific tasks while consuming far fewer resources. Their low cost and speed make them well suited to applications that run directly on our devices, like a smartphone, and they can offer better data privacy since the data doesn’t have to go through the cloud.

The Rise of Autonomous AI Agents

After the ability to understand and generate text, the next step was to give AI the capacity to act. This is the role of AI agents. The difference from a simple chatbot is fundamental: an AI agent can plan and then execute. It can book train tickets, send emails, and interact with other applications. Frameworks like LangChain or CrewAI can help build these agents.
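
The "plan, then execute" idea can be sketched without any framework. The example below is a toy under stated assumptions: the tools `book_train_ticket` and `send_email` are hypothetical stubs that only return strings, and `plan` returns a fixed list of steps where a real agent would ask a language model to produce it. It does not reflect the LangChain or CrewAI APIs.

```python
def book_train_ticket(origin, destination):
    """Hypothetical tool: pretends to book a ticket and returns a confirmation."""
    return f"Booked ticket {origin} -> {destination}"

def send_email(to, body):
    """Hypothetical tool: pretends to send an email and returns a confirmation."""
    return f"Email sent to {to}: {body}"

# Registry of the tools the agent is allowed to call.
TOOLS = {
    "book_train_ticket": book_train_ticket,
    "send_email": send_email,
}

def plan(goal):
    """Stand-in for the model: returns a fixed plan instead of generating one."""
    return [
        {"tool": "book_train_ticket", "args": {"origin": "Paris", "destination": "Lyon"}},
        {"tool": "send_email", "args": {"to": "team@example.com", "body": "Ticket booked for Paris -> Lyon"}},
    ]

def run_agent(goal):
    """Execute each planned step in order and collect the tool results."""
    results = []
    for step in plan(goal):
        tool = TOOLS[step["tool"]]
        results.append(tool(**step["args"]))
    return results

results = run_agent("Book a train to Lyon and tell the team")
```

A chatbot would stop at describing these steps; the agent actually calls the tools, which is where the frameworks mentioned above come in.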

The Democratization of AI with No-Code Automation

One of the most significant changes in AI in 2025 is undoubtedly its democratization. Thanks to no-code workflow automation, it is no longer necessary to be a developer to create intelligent processes. Platforms like Zapier, make.com, or the open-source alternative n8n allow us to connect AI models to our everyday applications, like Gmail, Slack, or Google Sheets.

Concretely, anyone can now build a workflow that:

- Fetches emails from a mailbox

- Applies some modifications to them

- Forwards the modified emails to another recipient
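
For readers curious about what such a workflow amounts to under the hood, the three steps above can be sketched in code. This is only an illustration: a no-code platform lets you assemble the same steps visually, and every name here (`Email`, `fetch_emails`, `modify`, `forward`, the addresses) is hypothetical, with an in-memory list standing in for a real mailbox.

```python
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Email:
    sender: str
    recipient: str
    subject: str
    body: str

def fetch_emails():
    """Step 1: fetch emails (here, a hard-coded stand-in for a real mailbox)."""
    return [
        Email("client@example.com", "me@example.com", "Quote request",
              "  Hello, could you send a quote?  "),
    ]

def modify(email):
    """Step 2: apply some modifications (tag the subject, trim the body)."""
    return replace(email, subject=f"[FWD] {email.subject}", body=email.body.strip())

def forward(email, new_recipient):
    """Step 3: forward the email, with the original recipient as the new sender."""
    return replace(email, sender=email.recipient, recipient=new_recipient)

def run_workflow(new_recipient):
    """Chain the three steps for every fetched email."""
    return [forward(modify(email), new_recipient) for email in fetch_emails()]

forwarded = run_workflow("sales@example.com")
```

In a tool like n8n, each of these functions would correspond to a node in the workflow, and an AI model could be plugged into the modification step.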

This accessibility is a game-changer, especially for companies without R&D teams, which can now leverage these technologies without spending hundreds of thousands of euros.

Conclusion: AI Becomes an Architect

In 2025, AI is shifting towards an orchestrator role. You no longer just use a model: you create an environment in which this model is deployed, interacts, and integrates with your processes.

Recent work published by the Meta FAIR team in September 2025, "CWM: An Open-Weights LLM for Research on Code Generation with World Models", illustrates this shift: rather than simply learning to predict code, this model was trained to model an environment, actions, and effects. The AI no longer just "sees" the code, it "imagines" what the code will do and how it will evolve within a system, which is a major qualitative leap.

For us, the question is no longer "which model?" but "which AI ecosystem will I build?":

- How do I choose between a generalist model and a specialized model?

- How do I link this model to my data or no-code workflows?

- How do I design the agent that will trigger actions, adjust results, and learn from my feedback?

- And of course: how do I implement this before my competitors?

What CWM shows is that the next steps in AI will be less about "augmentation" (adding more size, more parameters) and more about "integration": from model to agent, from agent to process, from process to business. You move from a model that answers queries to an agent that anticipates needs, triggers actions, and synchronizes systems.